In this post:

- Lessons from Bringing AI into the M&A Arena

- The Science Behind the Illusion: What GenAI Really Does

- Why GenAI Hallucinates — The Structural Issue

- Why This Is a Problem in M&A

- Conclusion: The Answer Is Not Always the Truth — At Least Not Yet

- Stay tuned.

The Science Behind the Illusion: What GenAI Really Does

Generative AI systems such as ChatGPT, Claude, or LLaMA are built on transformer architectures and trained on billions of sentences drawn from books, websites, articles, and forums. These models do not "learn facts" the way humans do. Instead, they model patterns in language: the probability that one sequence of words follows another.

Technically speaking, when you ask a question, the model is:

- Not querying a knowledge base (as a database lookup would).

- Not reasoning logically (as a human expert might).

- Instead, predicting a probable next token (a word or subword), one token at a time, given the input prompt and based on patterns it has statistically inferred from its training data.

This is why it’s called “language modeling,” not “fact modeling.”

And it’s the root cause of hallucinations.
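To make "predicting the next token" concrete, here is a minimal Python sketch of the final step of generation. It assumes the model has already produced a raw score (a logit) for each candidate next token; the candidate words and scores below are invented for illustration, and a real model scores tens of thousands of tokens using the entire prompt as context. Softmax turns the scores into probabilities, and greedy decoding picks the top one. Nothing in this step consults a source of facts.

```python
import math

def softmax(logits: dict[str, float]) -> dict[str, float]:
    """Turn raw model scores into probabilities that sum to 1."""
    top = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(score - top) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def greedy_next_token(logits: dict[str, float]) -> tuple[str, float]:
    """Pick the single most probable next token (greedy decoding)."""
    probs = softmax(logits)
    best = max(probs, key=probs.get)
    return best, probs[best]

# Hypothetical scores for the word that follows "Amazon acquired".
# The numbers are made up; they stand in for patterns learned in training.
logits = {"Whole": 4.0, "Twitch": 2.5, "Trader": 1.0, "Zappos": 2.0}

token, prob = greedy_next_token(logits)
print(token, round(prob, 3))
```

Note that "Trader" still receives a nonzero probability (about 3.5% with these made-up scores). Under sampling rather than greedy decoding, the model could emit it with exactly the same fluency.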

Why GenAI Hallucinates — The Structural Issue

Because GenAI is driven by probability rather than fact-verification, it cannot distinguish between information that is accurate and information that merely sounds right. To the model, "Amazon acquired Whole Foods in 2017" and "Amazon acquired Trader Joe’s in 2019" (a deal that never happened) are both fluent, viable completions. It favors the first only because that pattern appeared far more often in its training data, not because it checked the fact.

Moreover, when the model encounters:

- Gaps in training data,

- Niche domain-specific prompts (e.g., a specific clause in an M&A agreement),

- Or syntactically well-formed but factually impossible inputs,

it does not respond with “I don’t know.” Instead, it fills in the gap with the most statistically plausible text — confident, articulate, but often fabricated.

This problem is magnified in specialized contexts like M&A, where facts, numbers, and terminology are complex, dynamic, and high-consequence.
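The toy Python sketch below illustrates these points with a drastically simplified stand-in for an LLM: a bigram model that only counts which word follows which in a tiny, made-up corpus. Real transformers condition on the whole prompt and are far more capable, so treat this as an analogy rather than a model of production LLM internals. The corpus states that Microsoft acquired LinkedIn, yet when asked to continue "microsoft acquired" the toy model produces a fluent, confident, and false sentence, because "whole" follows "acquired" more often than anything else it has seen.

```python
from collections import Counter, defaultdict

# A tiny, made-up training corpus (hypothetical, for illustration only).
CORPUS = [
    "amazon acquired whole foods in 2017",
    "amazon acquired whole foods in 2017",
    "microsoft acquired linkedin in 2016",
]

def train_bigrams(sentences: list[str]) -> dict[str, Counter]:
    """Count how often each word is followed by each other word."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def generate(counts: dict[str, Counter], prompt: str, max_new: int = 6) -> str:
    """Greedily append the most frequent follower of the last word.

    There is no fact check anywhere: the only signal is co-occurrence counts.
    """
    words = prompt.split()
    for _ in range(max_new):
        followers = counts.get(words[-1])
        if not followers:  # nothing ever followed this word in training
            break
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)

model = train_bigrams(CORPUS)
print(generate(model, "amazon acquired"))     # -> amazon acquired whole foods in 2017
print(generate(model, "microsoft acquired"))  # -> microsoft acquired whole foods in 2017
```

The second output is grammatical and confidently phrased, and it contradicts the model's own training data. Frequency, not truth, decided the answer.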

Why This Is a Problem in M&A

In day-to-day M&A execution, professionals must navigate highly structured data, nuanced legal clauses, and rapidly evolving market and regulatory environments. The growing use of GenAI — for tasks like document analysis, auto-generated summaries, and predictive modeling — adds efficiency, but it also introduces new forms of risk.

Here’s the core issue: when GenAI is used to process or generate M&A-critical content, it can easily infer, misattribute, or fabricate information — and still present the result with perfect fluency and confidence.

This can lead to:

- Misguided deal decisions based on fictitious or wrongly inferred assumptions.

- Flawed summaries of complex legal, tax, or regulatory disclosures — misleading due diligence stakeholders.

- Synergy or risk projections that sound logical but have no basis in actual operating data.

- Generated due diligence reports that appear well-structured but embed hallucinated claims.

- AI-powered document analysis that misclassifies key clauses or misses material risks in contracts.

- Strategic briefings that look professionally drafted but are built on unreliable inputs or correlations.

These are not theoretical edge cases. We’ve observed such behaviors across GenAI tools, especially when they operate outside their trained domain — such as with private company data, jurisdiction-specific legal standards, or niche sector metrics.

And the challenge compounds under pressure. In fast-paced deal cycles, teams may accept AI outputs at face value. Without robust human verification, these hallucinations can slip through — leading to flawed decisions, broken narratives, or reputational exposure.
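One lightweight safeguard, sketched here as a hypothetical example rather than a complete verification workflow, is to cross-check every figure in an AI-generated summary against the source document and route anything unsupported to a human reviewer. The function name, the regular expression, and the sample texts are assumptions for illustration. A plain string match will miss reformatted numbers (for example "1.2bn" vs "1,200 million") and cannot judge whether a matching number is used in the right context.

```python
import re

# Matches figures like "1,250", "4.5", or "18" (a deliberately simple pattern).
NUMBER = re.compile(r"\d+(?:[.,]\d+)*")

def unsupported_figures(summary: str, source: str) -> list[str]:
    """Return numbers cited in the summary that never appear in the source."""
    source_numbers = set(NUMBER.findall(source))
    return [n for n in NUMBER.findall(summary) if n not in source_numbers]

# Hypothetical texts: the summary invents a 24-month earn-out period.
source_doc = "Purchase price of EUR 1,250 million with an earn-out period of 18 months."
ai_summary = "The buyer pays EUR 1,250 million plus an earn-out over 24 months."

flags = unsupported_figures(ai_summary, source_doc)
if flags:
    print("Needs human review; unsupported figures:", flags)
```

A check like this does not prove a summary correct; it only catches one class of fabrication cheaply so that reviewers can focus their attention.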


Conclusion: The Answer Is Not Always the Truth — At Least Not Yet

GenAI is a linguistic powerhouse, capable of generating everything from draft reports to strategic overviews in seconds. It’s reshaping how work gets done in virtually every knowledge industry — and M&A is no exception.

But its biggest flaw remains hidden in its fluency: GenAI is built to produce answers, not truths. It doesn’t understand what it says. It doesn’t know what it doesn’t know. It generates responses that are statistically plausible — not necessarily factually reliable.

And in M&A — where decisions are worth millions, and where facts must hold up under legal, financial, and strategic scrutiny — that distinction matters immensely. We don’t need fast fiction. We need evidence-based insights.

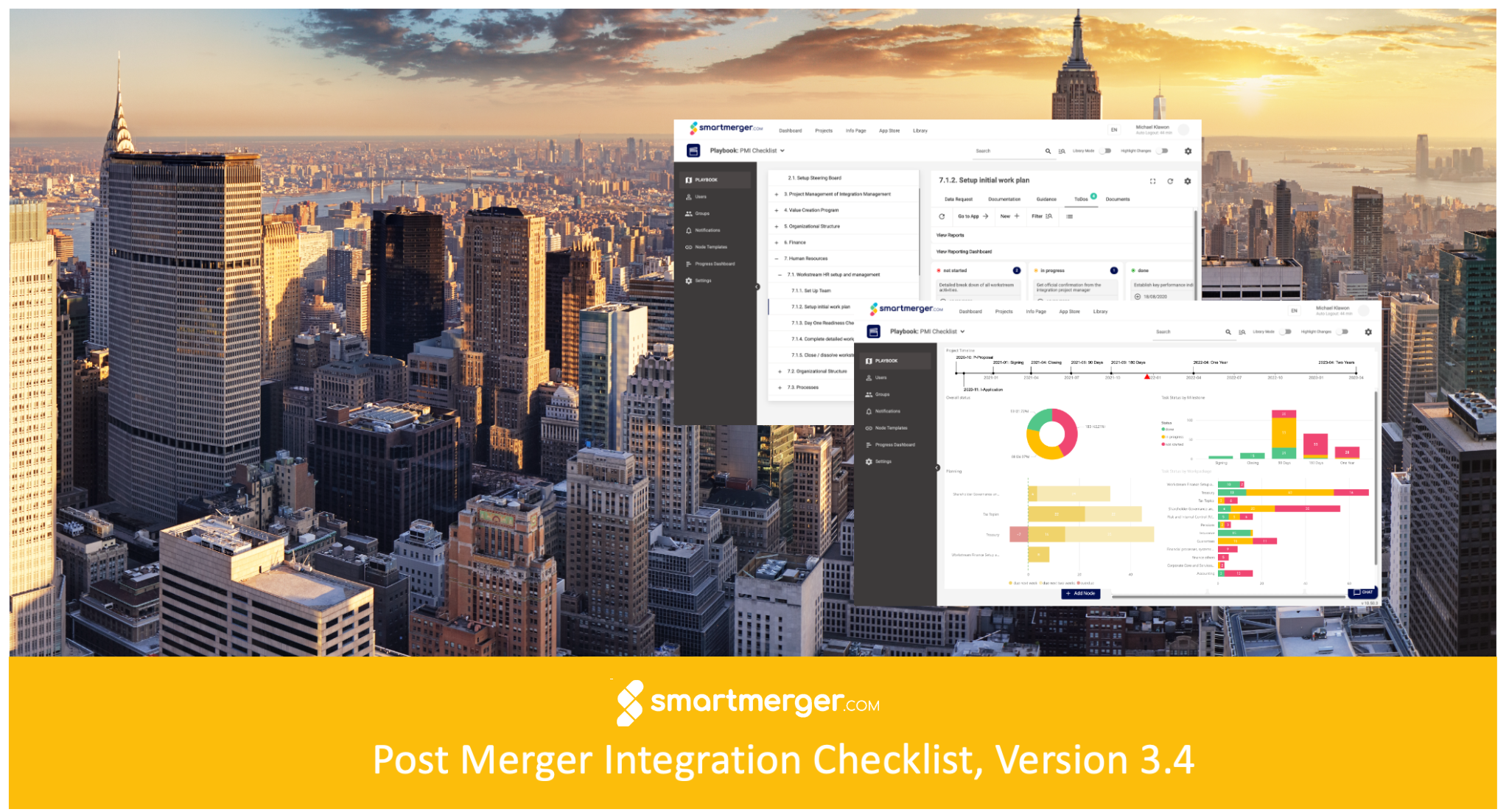

At smartmerger.com, we believe AI is only transformative when it’s applied responsibly. That’s why our platform:

- Builds on structured, validated deal data,

- Applies this structured data as the primary knowledge base for AI,

- Prioritizes internal knowledge as the primary AI input,

- Implements rigorous human verification workflows,

- And ensures that AI outputs are not just fast — but auditable, authorized, and actionable.
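The list above describes principles, not code, and the following Python sketch is not smartmerger.com's implementation. It is a minimal, hypothetical illustration of two of those ideas: answering only from a structured, validated record set (and abstaining when no record exists), and attaching the record ID plus a review flag so every output is auditable. All record fields, IDs, and function names are invented for the example.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class DealRecord:
    record_id: str   # hypothetical internal identifier
    acquirer: str
    target: str
    year: int
    validated: bool  # assumed to be set by a human reviewer upstream

@dataclass
class Answer:
    text: str
    source_id: Optional[str]  # provenance for auditing
    needs_review: bool

# Hypothetical validated knowledge base.
DEALS = [
    DealRecord("D-001", "Amazon", "Whole Foods", 2017, validated=True),
    DealRecord("D-002", "Microsoft", "LinkedIn", 2016, validated=False),
]

def acquisition_year(acquirer: str, target: str) -> Answer:
    """Answer from structured data only; never guess when a record is missing."""
    for deal in DEALS:
        if (deal.acquirer, deal.target) == (acquirer, target):
            return Answer(
                text=f"{acquirer} acquired {target} in {deal.year}.",
                source_id=deal.record_id,
                needs_review=not deal.validated,  # unvalidated data goes to a human
            )
    # No matching record: abstain instead of producing a plausible guess.
    return Answer("No matching record found.", source_id=None, needs_review=True)

print(acquisition_year("Amazon", "Whole Foods"))
print(acquisition_year("Amazon", "Trader Joe's"))
```

The key design choice is the explicit abstention branch: where a pure language model would still emit a fluent sentence, this lookup returns "no record" and escalates to a person.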

We design AI to work with professionals, not to replace their judgment.

That said, this is only the status quo. The AI landscape is evolving at a breakneck pace. We’re now entering the era of autonomous AI agents — tools that don’t just answer questions but take action on your behalf. Their potential in M&A is immense: orchestrating workflows, simulating deal scenarios, even coordinating end-to-end due diligence cycles.

But with that potential comes responsibility — to govern, validate, and guide AI systems with the same diligence we apply to deals themselves.

🟢 Stay tuned.

We’re tracking every advancement and continuing to integrate what’s useful, safe, and valuable for the M&A world. We’ll keep you updated as we push the boundaries, always grounded in trust, transparency, and transactional reality.

Because in M&A, the right answer isn’t just valuable — it’s everything.

See what Next Generation M&A looks like in action. Email hello@smartmerger.com to book your personalized walkthrough.